The code for building a reusable CD pipeline with Tekton

(Kubernetes-native CI/CD framework)

On this page, I share an improved version of the YAML files I worked with during the 'IBM DevOps and Software Engineering' course. After the course, I experimented extensively with the pipeline, polished it, and added comments to make it easier to follow what is going on.

The files you'll need on your system to create and run the pipeline are as follows:

(1) cd-pipeline.yaml for creating the Continuous Deployment pipeline.

(2) cd-tasks.yaml containing a couple of custom tasks used by the pipeline.

(3) cd-pvc.yaml for creating a PersistentVolumeClaim to be used as a workspace by the tasks.

This CD pipeline consists of the following tasks:

(1) Cleaning up the workspace (working directory).

(2) Copying (cloning) the source code to it from GitHub.

(3) Linting some Python code for a simple counter app.

(4) Unit testing this code.

(5) Building a container image for deployment in a Kubernetes environment.

(6) Deploying to Kubernetes using the OpenShift CLI (the `oc` command).

# cd-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cd-pipelinerun-pvc
spec:
  storageClassName: skills-network-learner  # Change this to the one you use.
  resources:
    requests:
      storage: 1Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce  # The volume can be mounted as read-write by a single node.

# cd-pipeline.yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: cd-pipeline
spec:
  workspaces:
    - name: cd-pipeline-workspace
  params:
    - name: branch  # The branch of the git repository to check out.
      default: "main"
    - name: repo-url  # It will hold the URL of a git repository to clone from.
    - name: build-image  # The full reference of the container image to build and deploy.
    - name: app-name  # It's helpful to have the application name as a parameter
                      # when reusing this pipeline to deploy different applications.
  tasks:
    # (1) Preparing the environment:
    #
    # (the implementation of this task is written in the cd-tasks.yaml file
    # and named "cleanup-working-directory")
    #
    - name: init
      workspaces:
        #
        # A common workspace name that many tasks use for code
        # is "source", so we name the workspace "source" for consistency.
        # This way, the path to the directory where the actions will
        # take place is going to be "/workspace/source".
        #
        - name: source
          workspace: cd-pipeline-workspace
      taskRef:
        name: cleanup-working-directory

    # (2) Copying using the git-clone task from the Tekton catalog:
    #
    - name: copy
      workspaces:
        #
        # We name it "output" for this task, because this is the workspace name
        # that the git-clone task will be looking for:
        #
        - name: output
          workspace: cd-pipeline-workspace
      taskRef:
        name: git-clone
      params:
        - name: revision  # Revision to check out (a branch in our case).
          value: "$(params.branch)"
        #
        # For the repository URL to clone from, the git-clone task
        # expects the name to be "url":
        #
        - name: url
          value: "$(params.repo-url)"  # This way, the parameter declared in the params section
                                       # near the top of this file can be referenced here.
      runAfter:
        - init

    # (3) Linting Python code using the flake8 task from the Tekton catalog:
    #
    - name: lint
      workspaces:
        - name: source
          workspace: cd-pipeline-workspace
      taskRef:
        name: flake8  # The task provides linting based on flake8 for Python.
      params:
        #
        # In the documentation for the flake8 task, you can see that it accepts
        # an optional image parameter that allows you to specify your own container image.
        # We choose to use "python:3.9-slim":
        #
        - name: image
          value: "python:3.9-slim"
        #
        # The flake8 task also allows you to specify arguments to pass
        # to flake8 using the "args" parameter.
        # For example, the arguments --count --statistics
        # would be specified as: ["--count", "--statistics"].
        #
        - name: args
          value: ["--count", "--max-complexity=10", "--max-line-length=127", "--statistics"]
      runAfter:
        - copy

    # (4) Unit testing Python code using the custom nose task:
    #
    # (the implementation of this task is also written in the cd-tasks.yaml file,
    # where it is named "nose", as it uses nosetests)
    #
    - name: tests
      workspaces:
        - name: source
          workspace: cd-pipeline-workspace
      taskRef:
        name: nose
      params:
        - name: args
          value: "-v --with-spec --spec-color"
      runAfter:
        - copy

    # (5) Building a container image using the buildah ClusterTask:
    #
    # (if you don't have buildah installed as a ClusterTask,
    # consider installing it as a regular task, and then remove
    # the line "kind: ClusterTask" from the block of code that follows)
    #
    - name: build
      workspaces:
        - name: source
          workspace: cd-pipeline-workspace
      taskRef:
        name: buildah
        kind: ClusterTask  # Indicates that this is a ClusterTask.
      params:
        - name: IMAGE
          value: "$(params.build-image)"
      runAfter:
        #
        # Listing both lint and tests makes the build wait until
        # both of them have finished. The two themselves run
        # concurrently (each only runs after copy), and this is fine,
        # as neither depends on the completion of the other:
        #
        - lint
        - tests

    # (6) Deploying to Kubernetes by running an OpenShift CLI command
    # using the openshift-client ClusterTask:
    #
    # (as with the previous task, you might consider installing it on your system
    # as a regular task if needed and removing the line "kind: ClusterTask")
    #
    - name: deploy
      taskRef:
        name: openshift-client
        kind: ClusterTask
      params:
        - name: SCRIPT
          value: "oc create deploy $(params.app-name) --image=$(params.build-image)"
      runAfter:
        - build

# cd-tasks.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: cleanup-working-directory
spec:
  description: This task will clean up a workspace by deleting all of the files.
  workspaces:
    - name: source
  steps:
    - name: remove-files-and-directories
      image: alpine:3
      env:
        - name: WORKSPACE_SOURCE_PATH  # Sets the environment variable to be used
                                       # in the embedded shell script that follows.
          value: $(workspaces.source.path)
      workingDir: $(workspaces.source.path)  # The root of the workspace.
      securityContext:
        runAsNonRoot: false
        runAsUser: 0  # The root user.
      script: |
        #!/usr/bin/env sh
        set -eu
        echo "Removing all files from ${WORKSPACE_SOURCE_PATH} ..."

        # Remove any existing contents of the directory if it exists.
        #
        # We refrain from using "rm -rf ${WORKSPACE_SOURCE_PATH}" directly because ${WORKSPACE_SOURCE_PATH}
        # could potentially be "/" or the root of a mounted volume.
        if [ -d "${WORKSPACE_SOURCE_PATH}" ] ; then
          # Delete non-hidden files and directories
          rm -rf "${WORKSPACE_SOURCE_PATH:?}"/*
          # Delete files and directories that start with a dot, excluding ..
          rm -rf "${WORKSPACE_SOURCE_PATH}"/.[!.]*
          # Delete files and directories that start with .. followed by any other character
          rm -rf "${WORKSPACE_SOURCE_PATH}"/..?*
        fi
---
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: nose
spec:
  description: Performs unit testing using nosetests.
  workspaces:
    - name: source
  params:
    - name: args
      description: Arguments to be passed to nose
      type: string
      default: "-v"
  steps:
    - name: nosetests
      image: python:3.9-slim
      workingDir: $(workspaces.source.path)
      script: |
        #!/bin/bash
        set -e
        python -m pip install --upgrade pip wheel
        pip install -r requirements.txt
        nosetests $(params.args)
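
If you would like to see what the three rm patterns in the cleanup-working-directory task do before running them in a cluster, they can be tried on a throwaway directory. The following is only a sketch: the scratch directory created with mktemp and the sample file names are assumptions made for illustration, not part of the pipeline.

```shell
#!/usr/bin/env sh
set -eu

# Hypothetical scratch directory standing in for /workspace/source.
WORKSPACE_SOURCE_PATH="$(mktemp -d)"

# Populate it with a regular file, a dotfile, a "..name" entry,
# a hidden directory, and a regular directory (all names are made up).
touch "${WORKSPACE_SOURCE_PATH}/app.py" \
      "${WORKSPACE_SOURCE_PATH}/.gitignore" \
      "${WORKSPACE_SOURCE_PATH}/..cache"
mkdir -p "${WORKSPACE_SOURCE_PATH}/.git/objects" "${WORKSPACE_SOURCE_PATH}/service"

# The same three patterns the task uses.
rm -rf "${WORKSPACE_SOURCE_PATH:?}"/*      # non-hidden entries
rm -rf "${WORKSPACE_SOURCE_PATH}"/.[!.]*   # entries starting with one dot
rm -rf "${WORKSPACE_SOURCE_PATH}"/..?*     # entries starting with two dots

# "ls -A" lists hidden entries too, but never "." and "..".
REMAINING="$(ls -A "${WORKSPACE_SOURCE_PATH}" | wc -l | tr -d ' ')"
echo "Entries left: ${REMAINING}"
```

The directory itself survives while everything inside it is removed, which is the behavior the task relies on when the workspace is the root of a mounted volume.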

When you have all three YAML files in place on your system, you'll need to apply the configurations they contain using the following CLI commands:

kubectl apply -f cd-tasks.yaml

kubectl apply -f cd-pipeline.yaml

kubectl apply -f cd-pvc.yaml

Next, install the git-clone and flake8 tasks into your Kubernetes namespace (if you haven't done so already):

# Install the git-clone task:

kubectl apply -f https://raw.githubusercontent.com/tektoncd/catalog/main/task/git-clone/0.9/git-clone.yaml

# Install the flake8 task:

kubectl apply -f https://api.hub.tekton.dev/v1/resource/tekton/task/flake8/0.1/raw

To check if you have the buildah and openshift-client tasks installed as ClusterTasks (as I did on my system), you can use this command:

tkn clustertask ls

If you don't, you'll need to install them to complete this endeavor.
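
Scanning a long listing by eye is error-prone, so the check can be scripted. The helper below is a hypothetical sketch, not part of the original setup: it assumes that the task name is the first column of the listing it receives on standard input, and it prints the required tasks that are absent. A canned listing is used here so that the snippet runs without a cluster.

```shell
#!/usr/bin/env sh
set -eu

# Hypothetical helper: reads a listing on stdin (first column = task name)
# and prints each required ClusterTask that does not appear in it.
missing_clustertasks() {
  listing="$(cat)"
  for task in buildah openshift-client; do
    if ! printf '%s\n' "${listing}" | awk '{print $1}' | grep -qx "${task}"; then
      echo "${task}"
    fi
  done
}

# Canned sample listing (made up for illustration) instead of a live cluster.
SAMPLE="NAME      DESCRIPTION     AGE
buildah   Builds images   3 days ago"

MISSING="$(printf '%s\n' "${SAMPLE}" | missing_clustertasks)"
echo "Missing: ${MISSING}"
```

Against a real cluster, the same function could be fed with `tkn clustertask ls | missing_clustertasks`, assuming the output keeps the name in the first column.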

For convenience, set the CONTAINER_REGISTRY_NAMESPACE shell variable to a value appropriate for your setup. Soon, it will be used in the shell command that starts the pipeline.

You can copy and paste the following line, replacing your-cr-namespace with your value:

CONTAINER_REGISTRY_NAMESPACE=your-cr-namespace

Note: the source code of the application to be deployed will be cloned from:

https://github.com/ibm-developer-skills-network/wtecc-CICD_PracticeCode.git

Now that everything is ready, it's time to run the pipeline!

tkn pipeline start cd-pipeline \
    -p repo-url="https://github.com/ibm-developer-skills-network/wtecc-CICD_PracticeCode.git" \
    -p branch="main" \
    -p build-image="image-registry.openshift-image-registry.svc:5000/$CONTAINER_REGISTRY_NAMESPACE/tekton-lab:latest" \
    -p app-name=simplecounter \
    -w name=cd-pipeline-workspace,claimName=cd-pipelinerun-pvc \
    --showlog

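Note:

The -p option sets the value of a pipeline parameter (one of those declared in the params section of cd-pipeline.yaml).

The -w option binds the pipeline's workspace to a volume, here the PersistentVolumeClaim created from cd-pvc.yaml.

The --showlog option streams the logs of the run to the terminal as soon as it starts.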

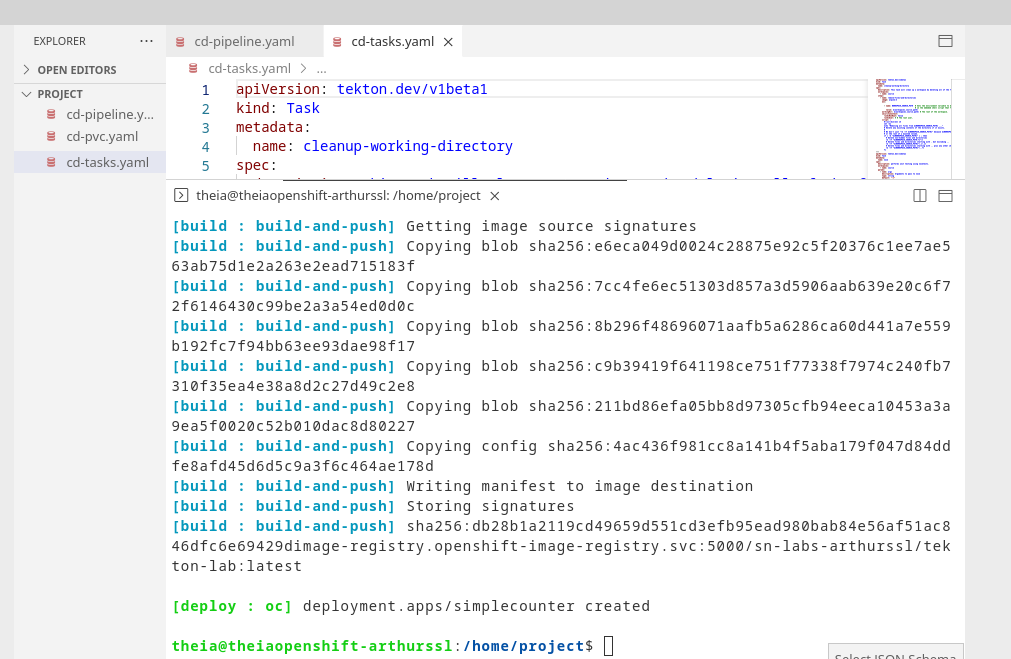

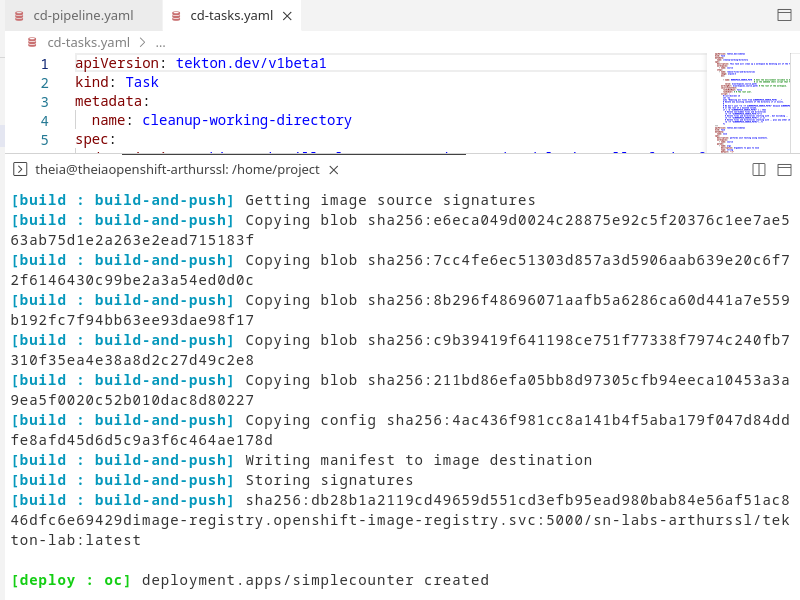

The final output should look similar to the one in the lower pane here:

You can see the pipeline run status by listing the PipelineRuns with:

tkn pipelinerun ls

To check the logs of the last run, use:

tkn pipelinerun logs --last
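
The `tkn pipeline start` command used earlier can also be expressed declaratively as a PipelineRun manifest, which is handy if you want to keep the run definition under version control. The sketch below only writes such a manifest to disk. The file name cd-pipelinerun.yaml and the generateName prefix are assumptions of mine, and the parameter values simply mirror the ones passed on the command line above.

```shell
#!/usr/bin/env sh
set -eu

# Placeholder default; replace it with your own registry namespace.
CONTAINER_REGISTRY_NAMESPACE="${CONTAINER_REGISTRY_NAMESPACE:-your-cr-namespace}"

# Hypothetical file name, not one of the three files listed at the top of the page.
MANIFEST="cd-pipelinerun.yaml"

# The heredoc delimiter is unquoted so that the namespace variable is expanded.
cat > "${MANIFEST}" <<EOF
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: cd-pipeline-run-
spec:
  pipelineRef:
    name: cd-pipeline
  params:
    - name: repo-url
      value: "https://github.com/ibm-developer-skills-network/wtecc-CICD_PracticeCode.git"
    - name: branch
      value: "main"
    - name: build-image
      value: "image-registry.openshift-image-registry.svc:5000/${CONTAINER_REGISTRY_NAMESPACE}/tekton-lab:latest"
    - name: app-name
      value: "simplecounter"
  workspaces:
    - name: cd-pipeline-workspace
      persistentVolumeClaim:
        claimName: cd-pipelinerun-pvc
EOF

echo "Wrote ${MANIFEST}"
```

Because the manifest uses generateName rather than a fixed name, it would be submitted with `kubectl create -f cd-pipelinerun.yaml` (not `kubectl apply`), and each submission starts a new run.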

This page was last updated on May 29, 2025